Artificial intelligence (AI) is only just getting started and is set to ramp up innovation across a range of industries. As internal and industry-specific AI tools become increasingly available, a new challenge emerges: can you trust them?

The effectiveness of an AI application hinges on the quality and reliability of the data it is trained on. The more AI is used to automate, enhance and augment processes, the more important data transparency and veracity become.

Recently, the Data & Trust Alliance introduced data provenance standards aimed at enhancing the trustworthiness of AI and AI-enhanced products. These standards highlight the importance of understanding the origin, age, sensitivity, and reliability of data, providing the transparency that a rapidly growing number of AI applications depend on.

Central to this transparency is the concept of data provenance, which captures the journey of data within an organization, offering insights into its quality, security, and validity. By harnessing information about the data in use, enterprises can identify and rectify bottlenecks, inconsistencies, or inaccuracies in the data pipeline, thereby improving the accuracy and efficiency of AI products. Conversely, without clear data provenance and veracity indicators, trust in AI models diminishes, and so do their chances of success.

At IndyKite, we are intensely focused on data visibility, transparency and how data can be used to drive value. We have long believed that identity tooling and identity data are underutilized by modern enterprises and, with some fresh thinking, can contribute innovative solutions to this ‘data trust’ challenge.

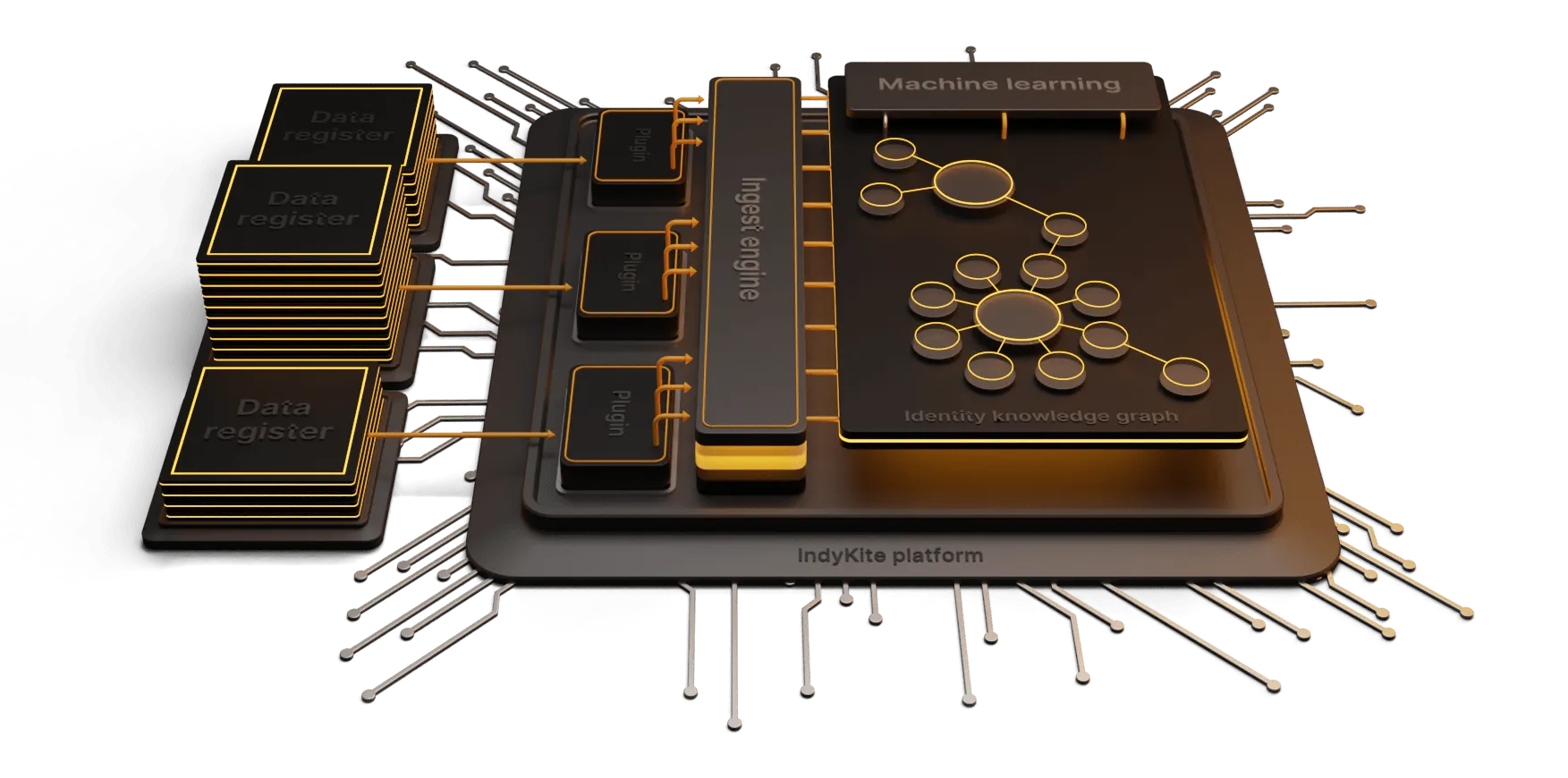

By leveraging an identity-centric approach, data provenance, context and risk attributes can be captured and used in a novel way. This approach treats applications, digital entities and data points as "identities," capturing the information needed to adhere to emerging digital trust standards. Crucially, it also allows sensitive data to be safeguarded, helping organizations comply with privacy regulations.

Getting started toward greater trust in your AI-enhanced applications does not need to be an arduous process or require significant overhauling of the tech you already have in place. With the right tooling, you can leverage your data as it is, from where it is, without a huge investment.

The magic is in how you approach the challenge and how you think about your data.

With IndyKite, you can use a flexible data model that lets you start small and evolve as you scale, without introducing complexity or compromising existing application logic. From there, you can create a unified data layer enriched with provenance metadata and relationships. You can then use this layer to drive intelligent access decisions, enhance your application logic, gain deeper insights from your analytics, or power your AI and AI-enhanced applications.
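To make the identity-centric idea more concrete, here is a minimal Python sketch, assuming a simple in-memory graph. It is not IndyKite's data model or API: the `DataIdentity` and `ProvenanceGraph` names, the `derived_from` relationship and the trust rule in `usable_for_ai` are all hypothetical. Each data point is treated as an identity carrying provenance attributes (origin, age and sensitivity, echoing the Data & Trust Alliance criteria above), and relationships between identities let a decision take the data's full lineage into account.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


# Hypothetical model: each application, dataset or data point is an "identity"
# node carrying provenance attributes. Not a real IndyKite API.
@dataclass
class DataIdentity:
    id: str
    origin: Optional[str]  # where the data came from; None if unknown
    created: datetime  # used to work out the data's age
    sensitivity: str  # e.g. "public", "internal", "restricted"
    derived_from: list[str] = field(default_factory=list)  # upstream identity ids


class ProvenanceGraph:
    def __init__(self) -> None:
        self.nodes: dict[str, DataIdentity] = {}

    def add(self, node: DataIdentity) -> None:
        self.nodes[node.id] = node

    def lineage(self, node_id: str) -> list[str]:
        """Return the node id plus every upstream id it was derived from."""
        seen: list[str] = []
        stack = [node_id]
        while stack:
            current = stack.pop()
            if current in seen:
                continue  # already visited via another path
            seen.append(current)
            stack.extend(self.nodes[current].derived_from)
        return seen

    def usable_for_ai(self, node_id: str, max_age: timedelta, now: datetime) -> bool:
        """Hypothetical trust rule: every identity in the lineage must have a
        known origin, be no older than max_age and not be "restricted"."""
        for nid in self.lineage(node_id):
            node = self.nodes[nid]
            if node.origin is None:
                return False
            if now - node.created > max_age:
                return False
            if node.sensitivity == "restricted":
                return False
        return True


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
graph = ProvenanceGraph()
graph.add(DataIdentity("crm-export", "crm", now - timedelta(days=30), "internal"))
graph.add(DataIdentity("scraped", None, now - timedelta(days=2), "public"))
graph.add(DataIdentity("features-v1", "etl-pipeline", now - timedelta(days=1),
                       "internal", ["crm-export"]))
graph.add(DataIdentity("features-v2", "etl-pipeline", now, "internal",
                       ["crm-export", "scraped"]))

print(graph.usable_for_ai("features-v1", timedelta(days=90), now))  # True
print(graph.usable_for_ai("features-v2", timedelta(days=90), now))  # False
```

Here `features-v2` is rejected because one of its upstream sources has no recorded origin, even though the dataset itself looks fine. Walking the lineage, rather than checking a single record, is why it helps to keep relationships alongside the provenance metadata.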

We are right at the start of seeing all that AI can offer, and we are also only scratching the surface of what can be achieved with identity-centric data veracity. In this next wave of AI, we must look not just at what AI can enable, but at how we can better enable AI.

Learn more in the E-guide: Identity-powered AI.